A few weeks ago I got started with Tensorflow and covered Tensors and operations. This week I’m going to continue to cover the basic building blocks of Tensorflow and then go over an interactive example that incorporates these elements.

Tensors – These are basically shaped collections of numbers. They can be multi-dimensional (an array of arrays) or a single value. Tensors are immutable, which means they can't be changed once created, and they require manual disposal to avoid memory leaks in your application.

// 2x3 Tensor

const shape = [2, 3]; // 2 rows, 3 columns

const a = tf.tensor([1.0, 2.0, 3.0, 10.0, 20.0, 30.0], shape);

a.print(); // print Tensor values

// Output: [[1 , 2 , 3 ],

// [10, 20, 30]]

const c = tf.tensor2d([[1.0, 2.0, 3.0], [10.0, 20.0, 30.0]]);

c.print();

// Output: [[1 , 2 , 3 ],

// [10, 20, 30]]
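To make the idea of a shape a little more concrete, here is a rough plain-JavaScript sketch of how a flat list of numbers gets read as rows and columns. The helper name toRows is something I made up for illustration; it is not part of Tensorflow.js, and the library stores its data differently under the hood.

```javascript
// toRows is a hypothetical helper, not a Tensorflow.js function.
// It groups a flat list of values into rows, the way a [rows, cols] shape is read.
function toRows(values, shape) {
  const [rows, cols] = shape;
  if (values.length !== rows * cols) {
    throw new Error(`Expected ${rows * cols} values but got ${values.length}`);
  }
  const result = [];
  for (let r = 0; r < rows; r++) {
    // Each row is the next run of `cols` values from the flat list.
    result.push(values.slice(r * cols, (r + 1) * cols));
  }
  return result;
}

const grid = toRows([1, 2, 3, 10, 20, 30], [2, 3]);
console.log(JSON.stringify(grid)); // [[1,2,3],[10,20,30]]
```

The same six numbers with a shape of [3, 2] would come out as three rows of two, which is why the shape matters as much as the values.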

Operations – An operation is just a mathematical function that can be used on a tensor. These include multiplication, addition, and subtraction.

const d = tf.tensor2d([[1.0, 2.0], [3.0, 4.0]]);

const d_squared = d.square();

d_squared.print();

// Output: [[1, 4 ],

// [9, 16]]

Models & Layers – A model is a function that performs some set of operations on tensors to produce a desired output. These can be constructed using plain operations, but there are also a lot of built-in models in Tensorflow.js that rely on established learning and statistical methods.

// Define function

function predict(input) {

// y = a * x ^ 2 + b * x + c

// More on tf.tidy in the next section

return tf.tidy(() => {

const x = tf.scalar(input);

const ax2 = a.mul(x.square());

const bx = b.mul(x);

const y = ax2.add(bx).add(c);

return y;

});

}

// Define constants: y = 2x^2 + 4x + 8

const a = tf.scalar(2);

const b = tf.scalar(4);

const c = tf.scalar(8);

// Predict output for input of 2

const result = predict(2);

result.print() // Output: 24

Memory Management – Tensorflow.js uses the GPU on your computer to handle most of its operations, which means that typical garbage collection isn't available. Tensorflow.js therefore includes the tidy and dispose methods, which allow you to clear unused tensors out of memory.

// tf.tidy takes a function to tidy up after

const average = tf.tidy(() => {

// tf.tidy will clean up all the GPU memory used by tensors inside

// this function, other than the tensor that is returned.

//

// Even in a short sequence of operations like the one below, a number

// of intermediate tensors get created. So it is a good practice to

// put your math ops in a tidy!

const y = tf.tensor1d([1.0, 2.0, 3.0, 4.0]);

const z = tf.ones([4]);

return y.sub(z).square().mean();

});

average.print() // Output: 3.5

As with my last post, much of this material comes from Tensorflow.js Getting Started and Dan Shiffman's The Coding Train series.

learning!

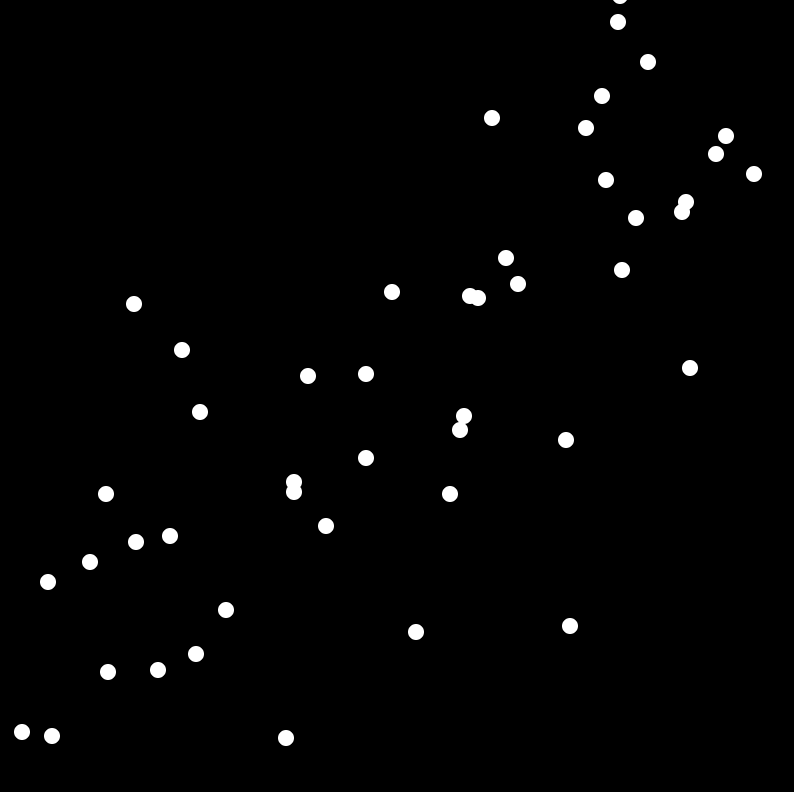

How do we learn about these points?

We need:

- A dataset

- A loss function (how wrong are we?)

- An optimizer (how do we improve?)

- A predict function (how do we demonstrate what we've learned?)

Simple Linear Regression:

In this case (linear regression) we need a bunch of X and Y values that we can plot. Let's say X represents the age of a building and Y represents its value. We set up arrays to hold these points.

let x_vals = [];

let y_vals = [];

Since we're modeling a line, we need to find a slope (m) and a Y-intercept (b) that fit our x and y values (Y = m*X + b).

let m, b;

Now we select the optimizer we want to use and set a learning rate. (The optimizer comes from the Tensorflow.js library, and a bunch of different methods can be found there.) In this case we use stochastic gradient descent ('sgd'). The learning rate tells the optimizer how aggressive to be when making its adjustments. We don't want our optimizer to react too much to any one datapoint, so this value should usually be no greater than 0.5.

const learningRate = 0.5;

const optimizer = tf.train.sgd(learningRate);
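To get a feel for what the learning rate does, here is a hand-rolled sketch of gradient descent on a line in plain JavaScript. This is only my approximation of the idea; it is not how Tensorflow.js implements tf.train.sgd internally. The helper names (gradientDescentStep, fit) and the sample points are made up, and I'm assuming mean squared error as the measure of how wrong the line is.

```javascript
// gradientDescentStep is a hypothetical helper written for illustration;
// it is not the Tensorflow.js implementation of tf.train.sgd.
// It nudges m and b downhill on the mean squared error of y = m * x + b.
function gradientDescentStep(m, b, xs, ys, learningRate) {
  const n = xs.length;
  let gradM = 0;
  let gradB = 0;
  for (let i = 0; i < n; i++) {
    const error = m * xs[i] + b - ys[i];
    gradM += (2 / n) * error * xs[i]; // d(loss)/dm
    gradB += (2 / n) * error;         // d(loss)/db
  }
  // A bigger learning rate means a bigger move per step.
  return { m: m - learningRate * gradM, b: b - learningRate * gradB };
}

// Made-up points that lie exactly on y = 0.5x + 0.2.
const sampleX = [0, 0.5, 1];
const sampleY = [0.2, 0.45, 0.7];

// Run a fixed number of steps starting from m = 0, b = 0.
function fit(learningRate, steps) {
  let params = { m: 0, b: 0 };
  for (let i = 0; i < steps; i++) {
    params = gradientDescentStep(params.m, params.b, sampleX, sampleY, learningRate);
  }
  return params;
}

console.log(fit(0.5, 500));  // m and b end up very close to 0.5 and 0.2
console.log(fit(0.01, 500)); // same number of steps, but still short of the answer
```

With a tiny rate the line creeps toward the data; with a rate that is too large each step can overshoot, which is why the value is worth experimenting with.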

Since we are trying to learn the slope (m) and Y-intercept (b) of our line, we need to hand the optimizer something to optimize. Here we initialize m and b to random values that will get continuously adjusted as our program learns. We're also going to create a canvas to help with visualizations. (This comes from the p5.js library.)

function setup() {

createCanvas(400, 400);

m = tf.variable(tf.scalar(random(1)));

b = tf.variable(tf.scalar(random(1)));

}

Now we want to define a function to figure out how wrong our currently random slope and intercept values are compared to our dataset.

function loss(pred, labels) {

return pred.sub(labels).square().mean();

}
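If the chained tensor calls feel opaque, this is roughly the same calculation written with plain arrays. The function name meanSquaredError is mine, not something from Tensorflow.js, and the numbers are the same ones used in the tidy example earlier so the result can be compared.

```javascript
// Plain-array version of pred.sub(labels).square().mean().
// meanSquaredError is a hypothetical helper, not part of Tensorflow.js.
function meanSquaredError(pred, labels) {
  if (pred.length !== labels.length || pred.length === 0) {
    throw new Error('pred and labels must be non-empty and the same length');
  }
  let total = 0;
  for (let i = 0; i < pred.length; i++) {
    const diff = pred[i] - labels[i]; // sub
    total += diff * diff;             // square
  }
  return total / pred.length;         // mean
}

// Differences are 0, 1, 2, 3; squares are 0, 1, 4, 9; the mean is 14 / 4.
console.log(meanSquaredError([1, 2, 3, 4], [1, 1, 1, 1])); // 3.5
```

Squaring keeps every error positive and punishes big misses more than small ones, so a lower number always means a better fit.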

This next function is where we apply what we've learned from our loss function and our optimizer. We need a best guess for what our building value (Y) is given any age (X).

function predict(x) {

const xs = tf.tensor1d(x);

// y = mx + b;

const ys = xs.mul(m).add(b);

return ys;

}

We can now populate our learning dataset by using mouse clicks on the canvas (again, this is p5.js).

function mousePressed() {

let x = map(mouseX, 0, width, 0, 1);

let y = map(mouseY, 0, height, 1, 0);

x_vals.push(x);

y_vals.push(y);

}
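The map calls above rescale pixel positions into the 0 to 1 range, and flip Y so that the bottom of the canvas is 0. Here is a small sketch of that rescaling written out by hand. The rescale function is my own stand-in for the p5 map() function, and the click position is made up.

```javascript
// rescale is a hypothetical stand-in for p5's map(), written out by hand.
// It moves a value from the range [inMin, inMax] to the range [outMin, outMax].
function rescale(value, inMin, inMax, outMin, outMax) {
  return ((value - inMin) / (inMax - inMin)) * (outMax - outMin) + outMin;
}

const canvasSize = 400; // matches createCanvas(400, 400)
const clickX = 100;     // made-up click position in pixels
const clickY = 100;

console.log(rescale(clickX, 0, canvasSize, 0, 1)); // 0.25
console.log(rescale(clickY, 0, canvasSize, 1, 0)); // 0.75 (Y is flipped)
```

Keeping the data between 0 and 1 means the random starting values for m and b are already in the right neighborhood.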

This next function (called draw) is run by p5 over and over, once per frame, which is what animates this project. You can see the predict function being called and tensors being cleaned up with the dispose and tidy functions. The only other Tensorflow.js functions we use here are tf.memory(), to make sure we have no leaks, and dataSync(), to pull the predicted Y values out of the tensor. Importantly, this could be set up using promises with the asynchronous data() method instead. However, this example is simple and we should get values fast enough to render properly.

function draw() {

tf.tidy(() => {

if (x_vals.length > 0) {

const ys = tf.tensor1d(y_vals);

optimizer.minimize(() => loss(predict(x_vals), ys));

}

});

background(0);

stroke(255);

strokeWeight(8);

for (let i = 0; i < x_vals.length; i++) {

let px = map(x_vals[i], 0, 1, 0, width);

let py = map(y_vals[i], 0, 1, height, 0);

point(px, py);

}

const lineX = [0, 1];

const ys = tf.tidy(() => predict(lineX));

let lineY = ys.dataSync();

ys.dispose();

let x1 = map(lineX[0], 0, 1, 0, width);

let x2 = map(lineX[1], 0, 1, 0, width);

let y1 = map(lineY[0], 0, 1, height, 0);

let y2 = map(lineY[1], 0, 1, height, 0);

strokeWeight(2);

line(x1, y1, x2, y2);

console.log(tf.memory().numTensors);

//noLoop();

}

See the Pen wXzwdY by jesse (@SuperJesse) on CodePen.

Polynomial version:

See the Pen yEaBpB by jesse (@SuperJesse) on CodePen.