In thinking about my PComp final, I’ve come up with an idea for a honk-detecting street sign. The goal of this sign would be twofold: to collect data on honking at various intersections, and to discourage unnecessary honking by providing a visual reaction to individual honks and longer-term trends. I came to this idea while listening to the amazing amount of honking that takes place outside my window on the Queensboro Bridge. The prototypical bystander reaction to the frustration of incessant honking is to scream obscenities out of your window. I totally get this urge. However, as a means of changing the behavior of drivers, it’s almost completely ineffective. I take this as a sign of an unmet need: people want to communicate the frustration of living around a honk-ridden intersection to the individual drivers, who are presumably just passing through with little awareness of the plight of nearby residents. My hope is that this sign can communicate the collective feeling of neighborhood residents to the individual driver who isn’t aware of the compounding effect of their honking. I believe that such a sign will be effective because, in my time spent listening to honking cars (against my will), I have learned that the decision to honk is highly informed by social dynamics.

Often honking will happen in clusters, as one particularly hurried or irritable driver starts the chain reaction by removing the slight stigma that exists. Eventually others will follow suit, as if to signal agreement in their displeasure with sitting in traffic. In extreme cases, when certain drivers feel their honks are being ignored (something I find insane), they will honk out a tune or simply hold down the horn until their non-verbal demands are met. Since honking operates within some discernible social system, I think this system can be altered by injecting a sign of displeasure with honking. Hopefully the number of “first honkers” can be reduced as they see statistics on honking or a visual representation of how their honks make residents in the area feel. As for the “ignored honkers,” I hope they will feel some slight shame or guilt when they see how honky of a day it’s been already. Ideally they’ll realize that NYC traffic does not operate according to the whims of the individual, and that trying to honk their way out of traffic is a tried and failed method.

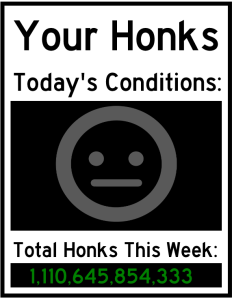

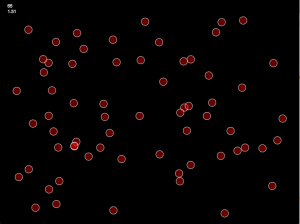

Here is my digital mockup of how the physical sign could look and function.

https://11bsouth.com/HonkSignMockUp/